Causal Support: Modeling Causal Inferences with Visualizations

IEEE Trans. Visualization & Comp. Graphics (Proc. INFOVIS) 2021Abstract

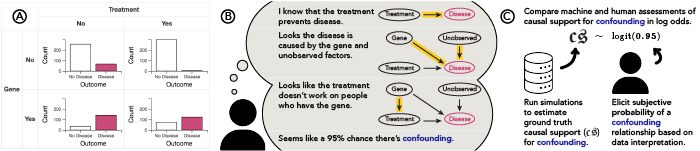

Analysts often make visual causal inferences about possible data-generating models. However, visual analytics (VA) software tends to leave these models implicit in the mind of the analyst, which casts doubt on the statistical validity of informal visual “insights”. We formally evaluate the quality of causal inferences from visualizations by adopting causal support—a Bayesian cognition model that learns the probability of alternative causal explanations given some data—as a normative benchmark for causal inferences. We contribute two experiments assessing how well crowdworkers can detect (1) a treatment effect and (2) a confounding relationship. We find that chart users’ causal inferences tend to be insensitive to sample size such that they deviate from our normative benchmark. While interactively cross-filtering data in visualizations can improve sensitivity, on average users do not perform reliably better with common visualizations than they do with textual contingency tables. These experiments demonstrate the utility of causal support as an evaluation framework for inferences in VA and point to opportunities to make analysts’ mental models more explicit in VA software.

Citation

BibTeX

@article{causal-support-2022,

title = {Causal Support: Modeling Causal Inferences with Visualizations},

author = {Kale, Alex and Wu, Yifan and Hullman, Jessica},

year = 2022,

journal = {IEEE Transactions on Visualization and Computer Graphics},

volume = 28,

number = 1,

pages = {1150--1160},

doi = {10.1109/TVCG.2021.3114824}

}