How Visualizing Inferential Uncertainty Can Mislead Readers About Treatment Effects in Scientific Results

ACM Human Factors in Computing Systems (CHI) 2020, Best Paper Honorable Mention

Abstract

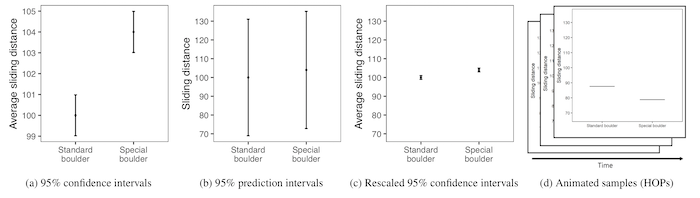

When presenting visualizations of experimental results, scientists often choose to display either inferential uncertainty (e.g., uncertainty in the estimate of a population mean) or outcome uncertainty (e.g., variation of outcomes around that mean) about their estimates. How does this choice impact readers’ beliefs about the size of treatment effects? We investigate this question in two experiments comparing 95% confidence intervals (means and standard errors) to 95% prediction intervals (means and standard deviations). The first experiment finds that participants are willing to pay more for and overestimate the effect of a treatment when shown confidence intervals relative to prediction intervals. The second experiment evaluates how alternative visualizations compare to standard visualizations for different effect sizes. We find that axis rescaling reduces error, but not as well as prediction intervals or animated hypothetical outcome plots (HOPs), and that depicting inferential uncertainty causes participants to underestimate variability in individual outcomes.
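To make the distinction between the two interval types concrete, here is a minimal sketch under a normal approximation. It is not the authors' analysis code: the function name `intervals`, the simulated data, and the fixed multiplier `z = 1.96` are illustrative assumptions. The prediction interval uses the simple mean ± 1.96 standard deviations form described in the abstract, which ignores the small-sample correction factor and t quantiles that an exact interval would use.

```python
import math
import random
import statistics


def intervals(sample, z=1.96):
    """Return approximate 95% confidence and prediction intervals.

    Hypothetical helper for illustration only; assumes roughly normal data
    and a sample large enough that z = 1.96 is a reasonable multiplier.
    """
    n = len(sample)
    mean = statistics.fmean(sample)
    sd = statistics.stdev(sample)   # spread of individual outcomes
    se = sd / math.sqrt(n)          # uncertainty in the estimated mean

    # Inferential uncertainty: where the population mean plausibly lies.
    ci = (mean - z * se, mean + z * se)
    # Outcome uncertainty: where a single new observation plausibly lies.
    pi = (mean - z * sd, mean + z * sd)
    return {"mean": mean, "sd": sd, "se": se, "ci": ci, "pi": pi}


# Simulated (made-up) outcomes for a control and a treatment group.
random.seed(0)
control = [random.gauss(100, 15) for _ in range(400)]
treatment = [random.gauss(103, 15) for _ in range(400)]

for name, group in (("control", control), ("treatment", treatment)):
    result = intervals(group)
    print(name, "95% CI:", result["ci"], "95% PI:", result["pi"])
```

Under these assumptions the prediction interval is wider than the confidence interval by a factor of √n, so with large samples two groups can have clearly separated confidence intervals while their individual outcomes overlap almost entirely.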

Citation

BibTeX

@inproceedings{visualizing-inferential-uncertainty-2020,
  title = {How Visualizing Inferential Uncertainty Can Mislead Readers About Treatment Effects in Scientific Results},
  author = {Hofman, Jake M. and Goldstein, Daniel G. and Hullman, Jessica},
  year = 2020,
  booktitle = {Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems},
  publisher = {ACM},
  series = {CHI '20},
  doi = {10.1145/3313831.3376454}
}